Artificial intelligence left a trail of mistakes in orders issued by two federal judges, according to Republican Sen. Chuck Grassley of Iowa.

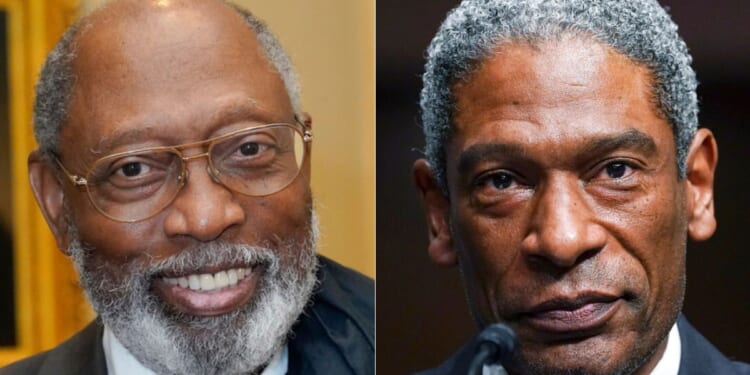

Two U.S. District Court judges, Henry Wingate of the Southern District of Mississippi and Julien Neals of the District of New Jersey, issued court orders drafted by staff that “misquoted state law, referenced individuals who didn’t appear in the case and attributed fake quotes to defendants, among other significant inaccuracies,” according to a release on Grassley’s website.

In both cases, judicial staff used AI while drafting opinions that were later withdrawn and replaced with corrected ones.

“Honesty is always the best policy. I commend Judges Wingate and Neals for acknowledging their mistakes, and I’m glad to hear they’re working to make sure this doesn’t happen again,” Grassley said.

2 federal judges sent me letters admitting their staff used AI to draft error ridden court orders Theyre now working 2ensure it doesnt happen again That’s a step fwd but we need to make sure this problem doesnt resurface in ANY federal court My oversight will continue

— Chuck Grassley (@ChuckGrassley) October 23, 2025

“Each federal judge, and the judiciary as an institution, has an obligation to ensure the use of generative AI does not violate litigants’ rights or prevent fair treatment under the law. The judicial branch needs to develop more decisive, meaningful and permanent AI policies and guidelines,” he continued.

“We can’t allow laziness, apathy or overreliance on artificial assistance to upend the Judiciary’s commitment to integrity and factual accuracy.”

Grassley noted that the Administrative Office of the Courts has issued guidance on the use of AI as the technology spreads through multiple levels of society.

The judges each said they have taken measures to ensure the mistakes do not take place again.

In a response to the Administrative Office of the Courts, Wingate said that in the order in question, “a law clerk utilized a generative artificial intelligence (‘GenAI’) tool known as Perplexity strictly as a foundational drafting assistant to synthesize publicly available information on the docket. The law clerk who used GenAI in this case did not input any sealed, privileged, confidential, or otherwise non-public case information.”

Wingate said the draft was published without the usual reviews.

“The standard practice in my chambers is for every draft opinion to undergo several levels of review before becoming final and being docketed, including the use of cite checking tools. In this case, however, the opinion that was docketed on July 20, 2025, was an early draft that had not gone through the standard review process. It was a draft that should have never been docketed. This was a mistake,” he wrote.

“I have taken steps in my chambers to ensure this mistake will not happen again,” he wrote, noting that in his opinion the cause of the mistake being published was “a lapse in human oversight.”

Neals said in his letter of response that “a law school intern, used CHATGPT to perform legal research.”

“In doing so, the intern acted without authorization, without disclosure, and contrary to not only chambers policy but also the relevant law school policy. My chambers policy prohibits the use of GenAI in the legal research for, or drafting of, opinions or orders,” he wrote, noting that he has gone a step further to ensure the policy is understood.

“In the past, my policy was communicated verbally to chambers staff, including interns. That is no longer the case. I now have a written unequivocal policy that applies to all law clerks and interns, pending definitive guidance from the AO through adoption of formal, universal policies and procedures for appropriate AI usage,” he wrote. “AO” refers to the Administrative Office of the Courts.

As noted by the Magnolia Tribune, Wingate’s order blocked a law aimed at limiting DEI practices from taking effect. After the mistake was found, the initial order was replaced with a new one.

The opinion Neals issued that was riddled with AI-induced errors denied a request from the company CorMedix Inc. to dismiss a lawsuit from shareholders, according to Bloomberg Law.
