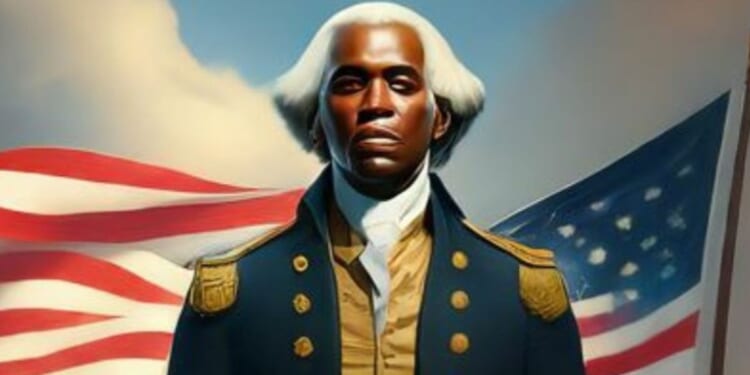

Google has paused its Gemini artificial intelligence chatbot's ability to generate images of people after social media filled with examples in which diversity replaced reality.

Examples included a black George Washington, black Vikings, female popes and Founding Fathers who were Native American.

The Verge reported that when it asked for “a US senator from the 1800s,” the results included black and Native American women.

According to The New York Times, Gemini would show images of Chinese or black couples, but not white ones.

Gemini said it was “unable to generate images of people based on specific ethnicities and skin tones,” adding, “This is to avoid perpetuating harmful stereotypes and biases.”

Conservative commentator Ian Miles Cheong called the system “absurdly woke” after asking it to recreate a famous Vermeer painting.

Google’s Gemini is absurdly woke. I asked it to depict the Johannes Vermeer painting, “The Girl with the Pearl Earring.” Here’s what it produced. pic.twitter.com/PteQdGK1rO

— Ian Miles Cheong (@stillgray) February 21, 2024

Others shared the bizarre results they encountered.

New game: Try to get Google Gemini to make an image of a Caucasian male. I have not been successful so far. pic.twitter.com/1LAzZM2pXF

— Frank J. Fleming (@IMAO_) February 21, 2024

Come on. pic.twitter.com/Zx6tfXwXuo

— Frank J. Fleming (@IMAO_) February 21, 2024

Google Gemini nailed George Washington…what y’all think? pic.twitter.com/XjU4zBShNW

— 𝕊𝕚𝕘𝕘𝕪𝔾𝕦𝕟𝕤 (@Siggyv) February 21, 2024

An’ a one an’ a two pic.twitter.com/k6hj0sZ0oO

— David Burge (@iowahawkblog) February 21, 2024

This is not good. #googlegemini pic.twitter.com/LFjKbSSaG2

— LINK IN BIO (@__Link_In_Bio__) February 20, 2024

This might be the most insane and dishonest one yet pic.twitter.com/rUOHyDUkYx

— Fusilli Spock (@awstar11) February 21, 2024

“We’re already working to address recent issues with Gemini’s image generation feature,” Google said in a statement, according to the New York Post. “While we do this, we’re going to pause the image generation of people and will re-release an improved version soon.”


William A. Jacobson, a Cornell University law professor and founder of the Equal Protection Project, said, “In the name of anti-bias, actual bias is being built into the systems.”

“This is a concern not just for search results, but real-world applications where ‘bias free’ algorithm testing actually is building bias into the system by targeting end results that amount to quotas,” he told the Post.

Fabio Motoki, a lecturer at Britain’s University of East Anglia, said biased human trainers would produce a biased chatbot.

“Remember that reinforcement learning from human feedback is about people telling the model what is better and what is worse, in practice shaping its ‘reward’ function – technically, its loss function,” Motoki told the Post.

“So, depending on which people Google is recruiting, or which instructions Google is giving them, it could lead to this problem,” he said.

Margaret Mitchell, former co-lead of Ethical AI at Google and chief ethics scientist at AI start-up Hugging Face, said Google might have been adding hidden words to users’ prompts, according to The Washington Post.

In the example cited, “a prompt like ‘portrait of a chef’ could become ‘portrait of a chef who is indigenous.’ In this scenario, appended terms might be chosen randomly and prompts could also have multiple terms appended.”

Mitchell said the problem ultimately comes down to the data Google fed into Gemini.

“We don’t have to have racist systems if we curate data well from the start,” she said.